This month’s #tsql2sday is being hosted by Adam Machanic (t|b):

Your mission for month #100 is to put on your speculative shades and forecast the bright future ahead. Tell us what the world will be like when T-SQL Tuesday #200 comes to pass. If you’d like to describe your vision and back it up with points, trends, data, or whatever, that’s fine. If you’d like to have a bit more fun and maybe go slightly more science fiction than science, come up with a potential topic for the month and create a technical post that describes an exciting new feature or technology to your audience of June 2026. (Did I do the math properly there?)

Here is my vision…

As I step into my home office and close the door, the walls and ceiling automatically bring up a live landscape of Grand Teton National Park. My priority tasks are displayed, and I see that today is the day we migrate our entire production environment to the latest update of SQL Server to take advantage of the new AI engine built on top of Azure Machine Learning.

The AI engine is going to be our DBA in the back pocket. We will no longer have to deal with monitoring and performance tuning. Ever since the initial automatic database tuning release back in 2017, Microsoft has been freeing up DBA resources with each update. Notifications for transactions, indexing, backups, DR/HA, and security tuning are displayed in the integrated Power BI console on one of the walls of my office. I remember the days when I had multiple screens on my desk; now I just move around the room to the various virtual workspaces, depending on the project I’m working on.

The migration has already been done by the older AI engine countless times under our dev and test instances. With dbatools having been integrated into the SQL engine years ago and expanded to over 3,000 commands, PowerShell is now my primary interface to SQL Server. The developers are still coding in T-SQL when the ORM tool doesn’t behave properly, but for me, PowerShell is where I’m comfortable.

As I start the production migration, open transactions are frozen, databases are moved between memory spaces, and then the transactions are brought back online. Users never even notice the tiniest of hiccups within their applications. The new SQL AI is already online and bringing up the status of the new instances on my wall. All is well.

The update cycle of SQL Server is still bringing value to us. The AI engine takes care of the weekly cumulative updates and leaves the monthly feature releases for me to approve. Automatic DR/HA with Azure makes the older AGs seem like the Stone Age. Our database, application, and web servers still reside under the control of our data centers, but DR/HA is handled for us.

Now that the migration is done, it’s time for a break. Catch you on the next #tsql2sday!

Doug Purnell

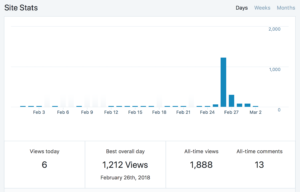

Back in February, one of my blog posts was highlighted on Brent Ozar Unlimited’s Weekly Links. Wow, what a bump in traffic! I usually receive a handful of views a week, but on February 26th the count reached 1,212. The cool part was that it was actually the #2 link in Brent Ozar Unlimited’s newsletter for the day. If you don’t currently subscribe, here is a link to start.

I’m honored to have been selected to speak at

Regular blogging is one of my goals this year. One of the areas I struggle with is what content to blog about. While I was listening to SQL Server Radio (

The Spectre/Meltdown can have a significant
